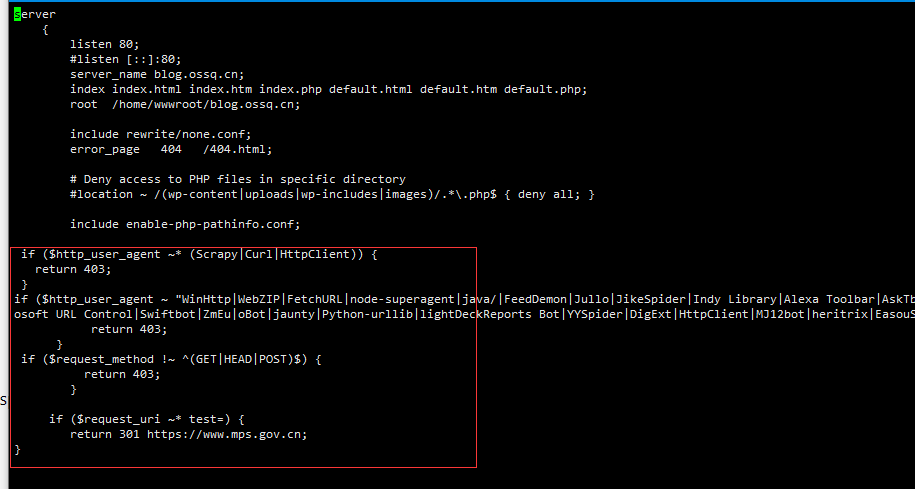

Nginx rules for hardening website security

1. Block scraping tools such as Scrapy

if ($http_user_agent ~* (Scrapy|Curl|HttpClient)) {

return 403;

}

2. Block specific user agents and requests with an empty user agent

if ($http_user_agent ~ "WinHttp|WebZIP|FetchURL|node-superagent|java/|FeedDemon|Jullo|JikeSpider|Indy Library|Alexa Toolbar|AskTbFXTV|AhrefsBot|CrawlDaddy|Java|Feedly|Apache-HttpAsyncClient|UniversalFeedParser|ApacheBench|Microsoft URL Control|Swiftbot|ZmEu|oBot|jaunty|Python-urllib|lightDeckReports Bot|YYSpider|DigExt|HttpClient|MJ12bot|heritrix|EasouSpider|Ezooms|BOT/0.1|FlightDeckReports|Linguee Bot|^$" ) {

return 403;

}
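A long user-agent list is often easier to maintain in a `map` block than in one large regex. The following is a minimal sketch, not the configuration used above: the variable name `$block_ua` and the host `example.com` are placeholders chosen for illustration, and only a handful of the agents from the list are shown. `map` has to live in the `http` context, outside any `server` block.

```nginx
# http context: classify the User-Agent once per request.
# $block_ua is a hypothetical variable name chosen for this sketch.
map $http_user_agent $block_ua {
    default          0;
    ""               1;  # empty or missing User-Agent
    ~*Scrapy         1;  # "~*" matches case-insensitively
    ~*HttpClient     1;
    ~AhrefsBot       1;  # "~" matches case-sensitively
    ~MJ12bot         1;
    "~Indy Library"  1;  # quote patterns that contain spaces
}

server {
    listen 80;
    server_name example.com;  # placeholder host

    # Reject any request whose agent was flagged by the map above.
    if ($block_ua) {
        return 403;
    }
}
```

Adding or removing an agent then means editing one line of the map rather than a single long pattern.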

3. Reject request methods other than GET, HEAD, and POST

if ($request_method !~ ^(GET|HEAD|POST)$) {

return 403;

}
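The same restriction can also be expressed with the `limit_except` directive, which is only valid inside a `location`. This is a sketch assuming a catch-all `location /`; allowing GET implicitly allows HEAD as well, and denied methods receive a 403.

```nginx
location / {
    # Allow GET (and therefore HEAD) and POST; deny every other method.
    limit_except GET POST {
        deny all;
    }
}
```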

4. Redirect any request whose URL contains test=

if ($request_uri ~* test=) {

return 301 https://127.0.0.1;

}
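Because `$request_uri` holds the path plus the query string, the pattern `test=` also matches parameters such as `latest=`. If the intent is to catch only a query parameter named exactly `test`, a stricter variant could look like the sketch below. It is an assumption about the intent, not part of the original rule.

```nginx
# Match only a query parameter named exactly "test",
# so that names such as "latest=" are not caught.
if ($args ~* "(^|&)test=") {
    return 301 https://127.0.0.1;
}
```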

5. Redirect requests containing these sensitive keywords to a 10 GB file download

if ($request_uri ~* "(\.gz)|(\")|(\.tar)|(admin)|(\.zip)|(\.sql)|(\.asp)|(\.rar)|(function)|(\$_GET)|(eval)|(\?php)|(config)|(\')|(\.bak)") {

return 301 http://lg-dene.fdcservers.net/10GBtest.zip;

}

6. Prevent script execution in specific directories: PHP must not run under uploads, templets, or data

location ~* ^/(uploads|templets|data)/.*\.(php|php5)$ {

return 444;

}
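Nginx tests regex locations in the order they appear in the configuration and uses the first one that matches, so this rule only takes effect if it is placed before the generic PHP handler. The sketch below illustrates the ordering; the host `example.com` and the FastCGI address `127.0.0.1:9000` are assumptions for illustration and should be replaced with the values of the actual setup.

```nginx
server {
    listen 80;
    server_name example.com;  # placeholder host

    # Must appear before the generic PHP location: regex locations
    # are tested in the order they are written, and the first match wins.
    location ~* ^/(uploads|templets|data)/.*\.(php|php5)$ {
        return 444;  # close the connection without sending a response
    }

    # Hypothetical PHP handler; the FastCGI address is an assumption.
    location ~ \.php$ {
        include fastcgi_params;
        fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
        fastcgi_pass 127.0.0.1:9000;
    }
}
```

After changing the configuration, `nginx -t` checks the syntax before a reload.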